In the prelude to *How We Became Posthuman*, N. Katherine Hayles describes two different variations on the Turing test. The most famous one, the one many of us may know, involves a person using some kind of computerized chat interface to talk to either a computer or a human in another room. It is the task of the test subject to determine, from conversation, whether their interlocutor is human or machine. Passing the Turing test has long been seen as one of the holy grails of artificial intelligence. When computers are able to pass as human, the argument goes, one of the distinctions between humans and computers dissolves.

Hayles also describes another Turing test. This one starts in the same way as the first, with a human participant talking to someone in another room through a computerized chat interface. In this version, however, the conversation partner on the other side is definitely human. The participant's goal is instead to determine whether that partner is male or female. If this second Turing test has stakes similar to the first, Hayles asks, does an ability to fool the participant negate the gender of the human on the other side?

One of the crucial questions raised by the gender Turing test, to my mind, concerns the role of rigid, socially defined gender binaries. The test is predicated on the understanding that there are two genders, male and female, and that each behaves in a certain way. If we choose not to take this idea for granted, and instead recognize a vast spectrum of behaviour and appearance, running from that which completely and stereotypically matches a gender to that which is entirely opposed to it, the gender Turing test becomes impossible. How do we decide, from a textual discussion, what gender someone is if we do not require all people to adhere to a strict social script about their gender?

That second Turing test does do some valuable work for us: it highlights the importance of the visual in making judgments about gender. Many people feel entitled to know another person's gender based on their appearance. We sometimes hear the distressed question, whispered behind hands, "Is that a man or a woman?" In a space where those visual cues are not required, where we can present ourselves textually or in ambiguous photos, appearance, that popular tool for determining gender, is not available.

There's a huge spectrum of ways that gender is represented, discussed, made an issue, or turned into infrastructure on the internet. Different platforms construct gender as an issue of varying importance. In some software development communities (on mailing lists, in IRC), it's generally considered impolite to ask people for personal details they're not readily volunteering. One concern often raised about this norm is that many women go unnoticed or uncounted because they don't mention or make obvious their gender, and the default, un-gendered state is assumed to be male. If someone does not make it explicitly clear that she is a woman, she is assumed to be a man. If someone makes it explicitly clear that they are something other than simply a woman or a man, it starts a discussion, which may or may not be welcome to the person who has accidentally instigated it. So on one side of this spectrum, there are communities where disclosing gender is not structurally necessary and speculation is an entirely private activity carried out by individuals. On the other side, there are platforms like Facebook, where stating a gender is a required step in building a profile and the default choice is man or woman; if you choose to write in an answer instead, you are offered an authorized list of possibilities. Gender is built into the bedrock of Facebook. We take for granted that we can find out what gender someone is on Facebook. More than that, we take for granted that we can find out what gender someone is in general.

Using the analogy of the Turing test, I've devised a workshop that explores how we parse other people's gender and gender representation when they are divorced from their bodies. I am trying to make legible the issues around the gender binary and its supporting structures. There are a few concepts to work with in service of that goal: the gender Turing test and the idea of judging gender based on text-based interaction; technical systems that occupy different places on the spectrum of gender disclosure and, as a subset of that, the attitude of those systems toward descriptors other than male or female; and the ever-present comparison of the gender binary to digital binary, with the real spectrum of gender alignment being more like analog.

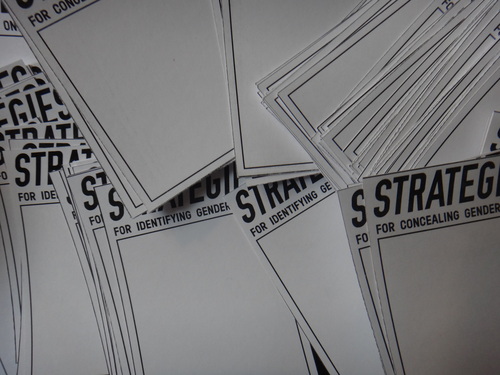

The workshop is structured around a kind of Turing test, in this case called the Strategies game. The Strategies game is a deliberately obfuscatory Turing test. Groups take on the role of either the agent trying to ascertain gender or the agent trying to hide it. All groups start by exploring their own cultural assumptions about gender and listing the things they see as essential signifiers. From there, some groups devote themselves to defining strategies for concealing gender online, and others to devising strategies for identifying it. The agents trying to ascertain gender use their list to devise a system for winkling the information out of their opposing agent. The agents trying to hide their gender do similarly, attempting to devise systems that prevent the other side from ascertaining it. Each strategy, whether its goal is to be revelatory or obfuscatory, goes on its own card; the cards look like those used in card strategy games, or like sports trading cards. Once the card-development session is done, groups nominate cards to go into a deck that will be used by one of the players in the Turing test.

Once the decks are made, with each group contributing an equal number of cards, chosen by educated guess, to one of the two decks, everyone in the room closes their eyes. A volunteer is sought to play the role of the identifier. They are taken to a chair at the front of the room, facing a projection screen and facing away from the rest of the group. With everyone's eyes still closed, a second volunteer is sought to be the concealer. They are taken out of the room to a separate area equipped with a computer. The two players connect to some kind of chat client or collaborative editing platform (so far, I've used Etherpad). Taking alternate turns, the two players play the strategies listed in their respective decks. Each strategy can only be used once. At the end of the session, when both players are out of cards, the identifier is asked whether they believe they can identify the concealer's gender.

In asking participants to consider strategies for concealing and identifying gender, and in playing out a modified Turing test, this workshop tackles ideas of gender binaries, cultural gender scripts and requirements, the violence of forced disclosure, and the differing conditions under which we identify ourselves as gendered.